A series of four papers published between November 2019 and October 2020 by Dr Chao Chen and his team from the Department of Mechanical and Aerospace Engineering and Laboratory of Motion Generation and Analysis from Monash University (Victoria, Australia; www.monash.edu) describe the step-by-step process of developing the vision and robotic guidance system required to successfully develop an automated apple harvester.

Autonomous crop harvesting technology development represents an example of how robotics and machine vision systems complement one another. The vision side requires development and refinement of image recognition algorithms to determine the location of fruit and the orientation of the fruit relative to the camera. This information can generate 3D images to guide the movement of the robot arm and a gripper to pick the crops.

If an autonomous crop harvesting system utilizes deep learning, it often involves a system of neural network (NN) models running sequentially and/or in parallel with each model focusing on a different task. The ability to study in detail the development of such a system highlights the nature of these challenges and elucidates the keys to success.

In their first paper, “Fast implementation of real-time fruit detection in apple orchards using deep learning (bit.ly/VSD-FSTIMP),” researchers describe the creation of an auto label generation model and a deep learning-based fruit detector called LedNet.

A neural network needs to see many images of an object, in this case apples, to learn what the object looks like. The learning process requires labeled images of that object. Typically, a human would draw a bounding box around an apple in an image and then label the contents inside the bounding box as “apple.” When shown hundreds or thousands of these annotated images, the NN can eventually learn how to identify apples in fresh images. (Figure 1)

The system developed by the researchers at Monash University includes a traditional feature-based object recognition algorithm that assists in the automated production of annotated images. This auto label generation model requires a small number of images for training according to Dr. Chao Chen, Director of the Laboratory of Motion Generation and Analysis at Monash University.

When shown a fresh image, the model creates a Region of Interest (ROI) around each portion of the image believed to be an apple. All ROIs believed to contain an apple are assigned the class foreground and the remainder of the image is classified background.

The model then determines the center point of each apple by looking for connected, matching pixels by color and other attributes and uses that center point for reference to draw a bounding box around the apple. Finally, these ROIs run through a ResNet NN trained to identify apples to identify false positives, resulting in annotated images used for model training.

To train the deep learning-based LedNet fruit detector model, researchers collected 800 images from an orchard in Qingdao, China, using the Microsoft (Redmond, WA, USA; www.microsoft.com) Kinect-v2 camera. They also collected 300 images of apples in scenes other than orchards and 100 images that did not have any apples in them. They selected 800 images from this total dataset for training.

Data augmentation of the training images included reduction into 1/4, 1/8, and 1/16 size versions; rotating and flipping; and brightness, contrast, and saturation adjustment to teach LedNet how to identify apples at different sizes, orientations, and under varied lighting conditions.

In their second paper, “Fruit detection, segmentation and 3D visualization of environments in apple orchards (bit.ly/VSD-FRTDTC),” researchers describe the system’s refinement, adding the ability to detect tree branches by creating a specific NN model for the purpose. Analyzing the geometric properties of each apple and determining the pose of the apple relative to the camera requires depth information. Therefore, the researchers incorporated an Intel (Santa Clara, CA, USA; www.intel.com) RealSense D-435 camera along with a Logitech (Lausanne, Switzerland; www.logitech.com) webcam-C615.

Where the first iteration of the system broke the apples down into image classifications—foreground and background—and then identified apples within the foreground class, the second iteration of the system includes an instance segmentation algorithm to also segment each object, i.e. each apple, within the class. This allows the system to calculate individual robot pathing for each separate apple.

In their third paper, “Visual perception and modeling for autonomous apple harvesting (bit.ly/VSD-VISPCT),” researchers describe a third iteration of the fruit detection and 3D modeling algorithms and the creation of a new robot control framework.

The system tested with a UR5 robotic arm by Universal Robots (Odense, Denmark; www.universal-robots.com) fitted with a custom-designed end-effector; an Intel RealSense D-435 RGB-D camera; a computer running the Ubuntu 16.04 LTS operating system with versions featuring an NVIDIA (Santa Clara, CA, USA; www.nvidia.com) GTX-1080Ti GPU or Jeston-X2 module; and an Arduino Mega-2560 PLC.

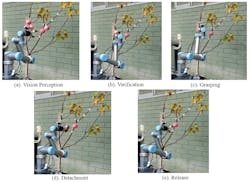

Three programming steps or blocks enable the robot to make the pick. A vision processing block receives color and depth information from the RealSense camera to identify the location and pose of the fruit. This information transmits through the PLC to a robot controller running the Kinetic version of the Robot Operating System (ROS) and a manipulator block controls the robot arm. Finally, a grasping block controls the end-effector to grasp and then release the fruit.(Figure 2)

The fruit identification algorithms were tested during a laboratory experiment with simulated apple trees and in a simulation using images gathered from the orchard in Qingdao. The system scored its highest accuracy ratings for center estimation and pose computation for fruit between 0.3 and 0.5 m away from the camera. This allowed the researchers to determine optimal camera position before attempting to make a pick with the robot arm.

Finally, an autonomous harvesting experiment ran on the simulated apple trees in the lab. Once the researchers developed a system that accounted for apples obscured by branches or leaves, when the apples were clearly separated on the branches, the success rate was 91%; if the apples were partially obscured the success rate was 81%.

In the fourth paper, “Real-Time fruit recognition and grasping estimation and robotic apple harvesting (bit.ly/VSD-RTFRR),” researchers describe the creation of an entirely new algorithm based on the previous fruit detection models that combines detection, 3D modeling, and determining the optimal position for the robot arm. The new system incorporates an algorithm based on the PointNet network that performs classification, segmentation, and other tasks on 3D point clouds produced by the D-435 RealSense camera.

The same UR5 robot arm and Intel RealSense D-435 deployed on a customized four-wheeled vehicle. (Figure 3) The system runs on a Dell (Round Rock, TX, USA; www.dell.com) Inspiron PC with Intel i7-6700 CPU and NVIDIA GT-1070 GPU, again running the Kinetic version of ROS and Linux Ubuntu 16.04. A RealSense communication package connects the camera, robot, and PC.

The fruit recognition system tested on image data as in the prior experiments. The robotic harvester tested in indoor and outdoor environments on real apple trees. The RealSense camera mounted on the UR5 robot. For indoor tests, the system demonstrated an 88% success rate for apple position and pose prediction and an 85% harvesting success rate. In outdoor tests, the system demonstrated an 83% success rate for apple position and pose prediction and 80% harvesting success rate.

In the conclusion for this final study, researchers state that future work includes further optimization of the vision algorithm to increase accuracy, robustness, and speed and optimization of the custom end-effector for the robot arm to increase its dexterity and ability to successfully grasp apples in challenging poses.